Introduction

GMDH Shell for Data Science combines GMDH (Group Method of Data Handling, http://www.gmdh.net/) with many other classic methods and algorithms to support decision making in a variety of application domains.

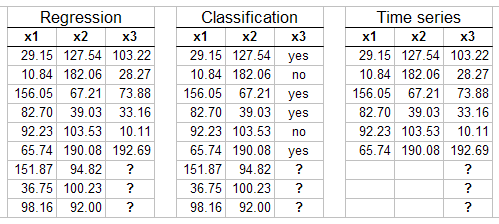

When you import a new dataset, GMDH Shell displays a list of preconfigured templates that let you choose between Regression, Classification, and Time Series Forecasting tasks. The following examples should help you identify your modeling task:

start.txt · Last modified: 2021/06/01 03:27 (external edit)